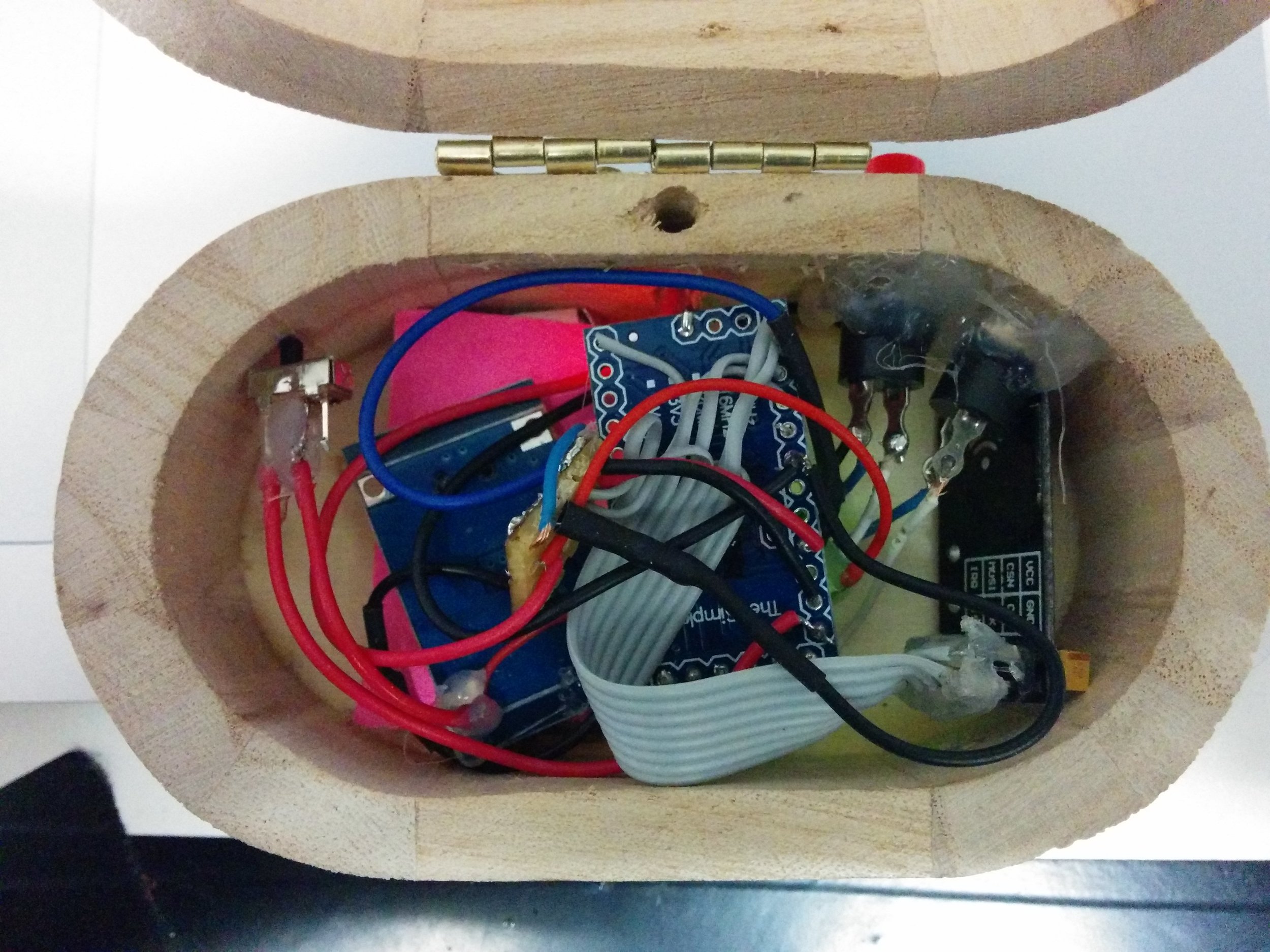

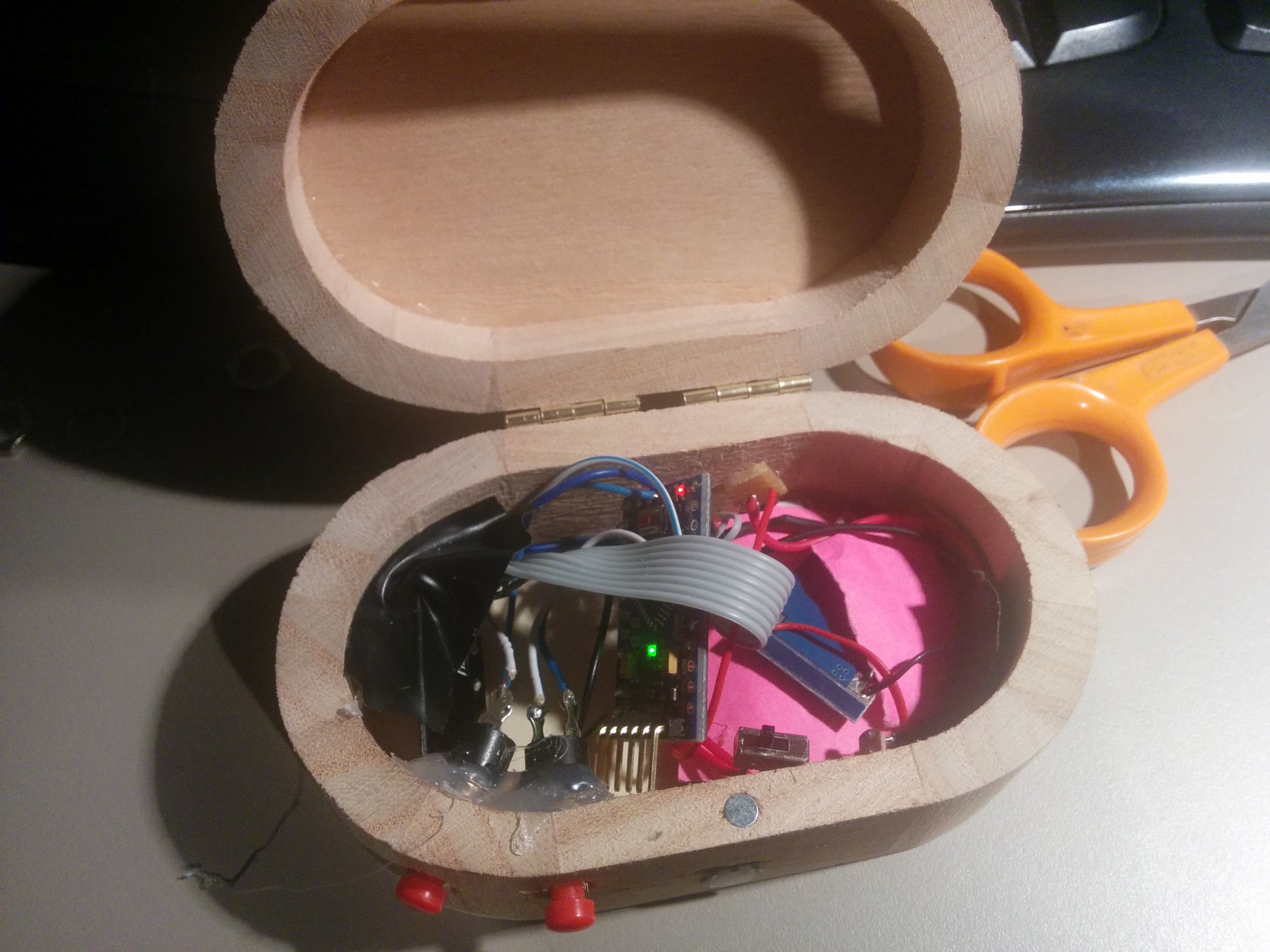

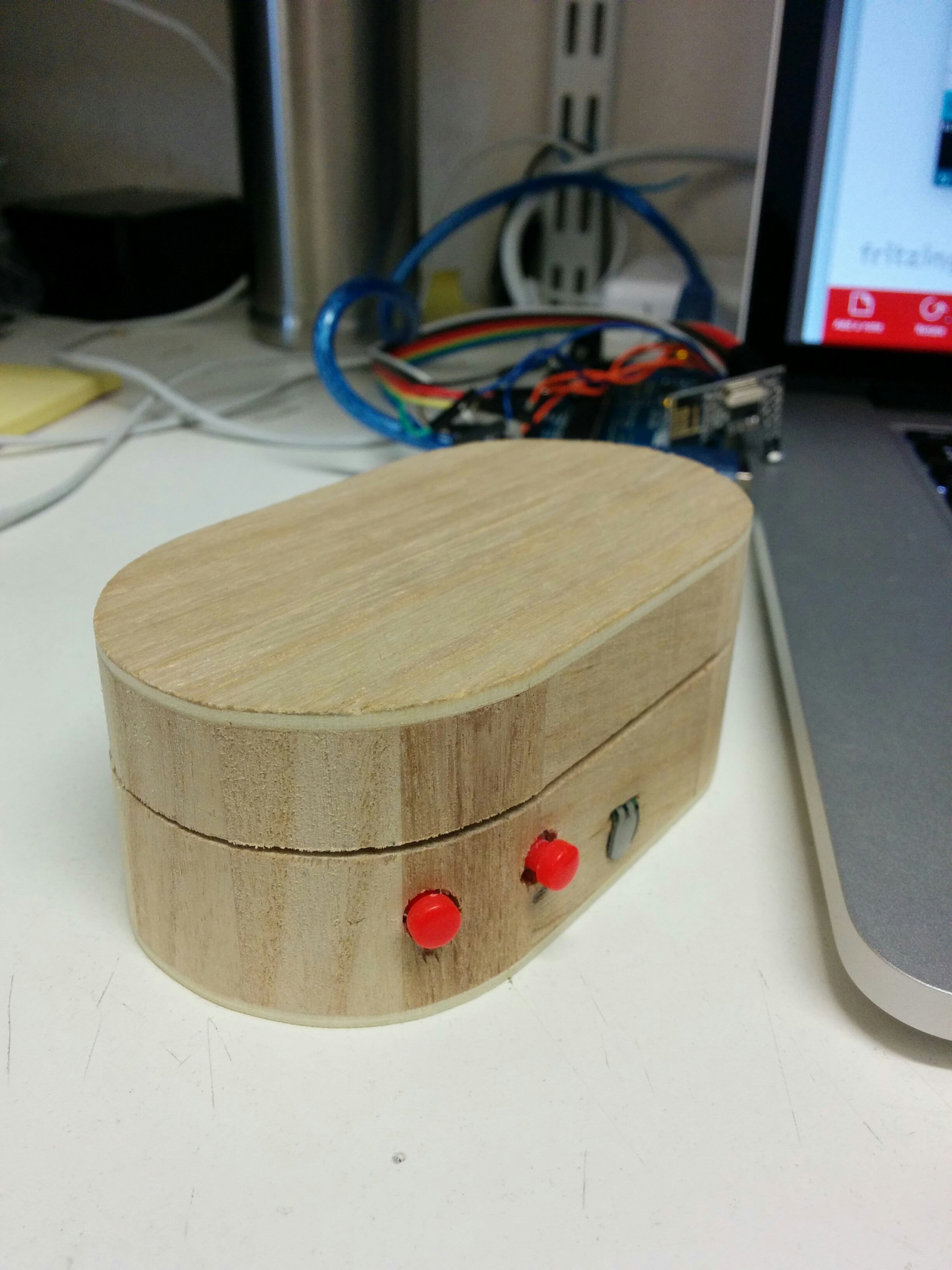

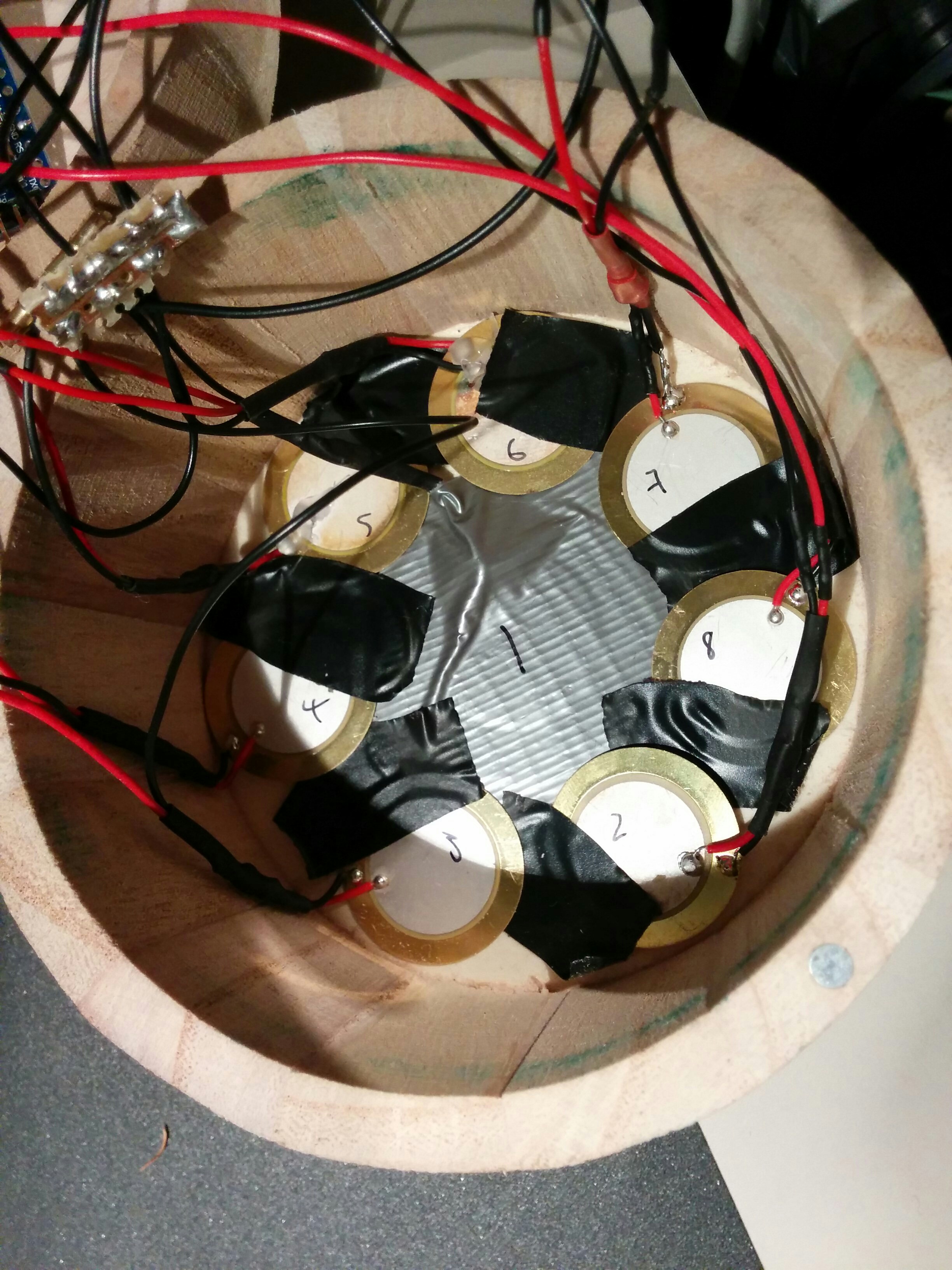

We have had a bit of a hiatus from developing hardware for the orchestra project at Threeways School, for a number of reasons. On returning to the project we started examining the problems we keep running into when developing accessible instruments. The main one we focussed on is that we always have to write new software whenever we want to try out a piece of hardware, whether it is an existing device or something bespoke. Wouldn't it be great to have a modular system that we could plug any bit of hardware into, and then map its controls to various sound generators, effects, parameters and so on? To some extent you can already do this with a Max patch that reads the incoming control data and an Ableton Live template that does something with that data. However, if we want to make things that anyone can use, or that are built into an embedded system, we may want to avoid depending on Live and instead create something that works as a read-only Max patch.

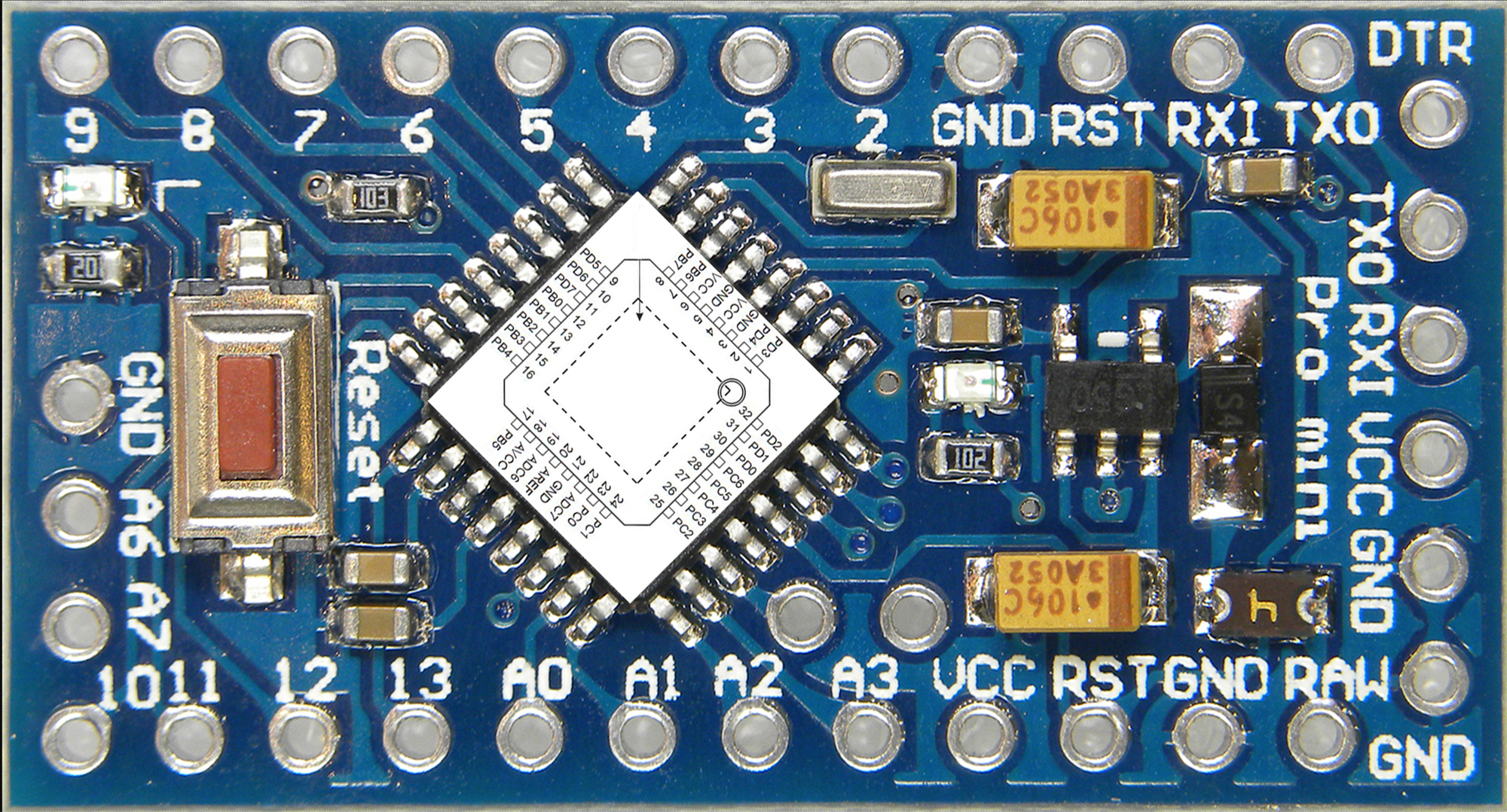

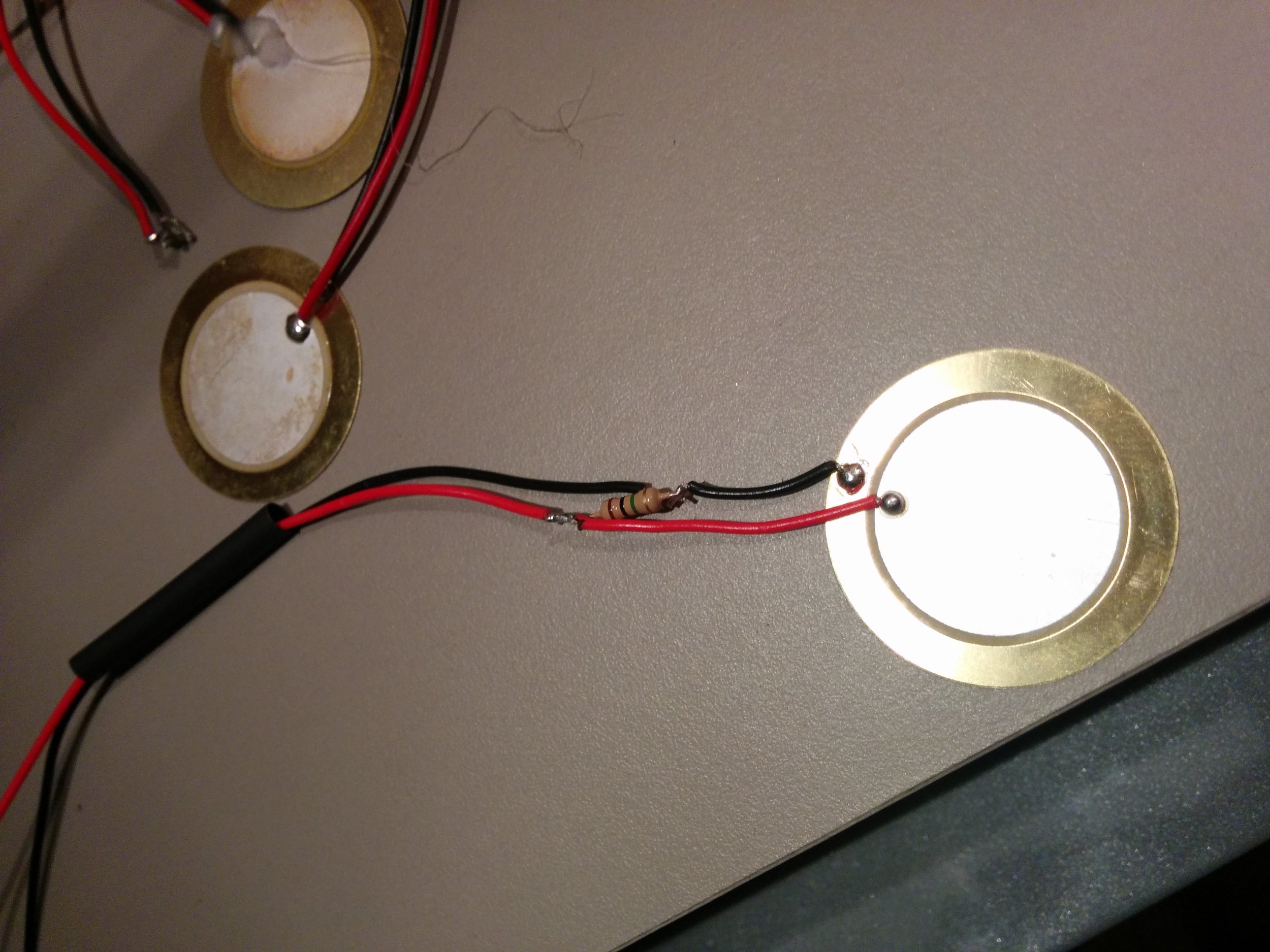

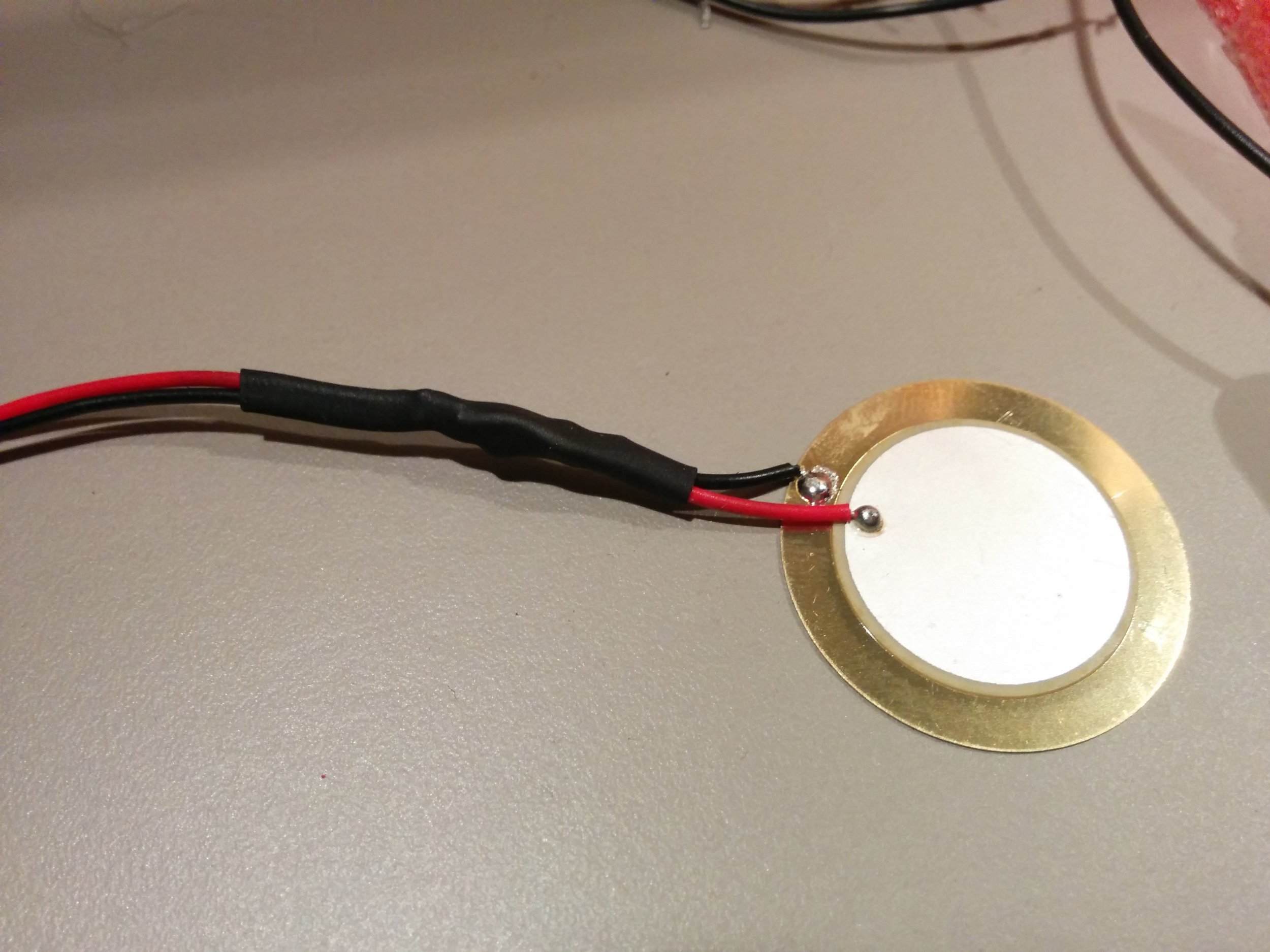

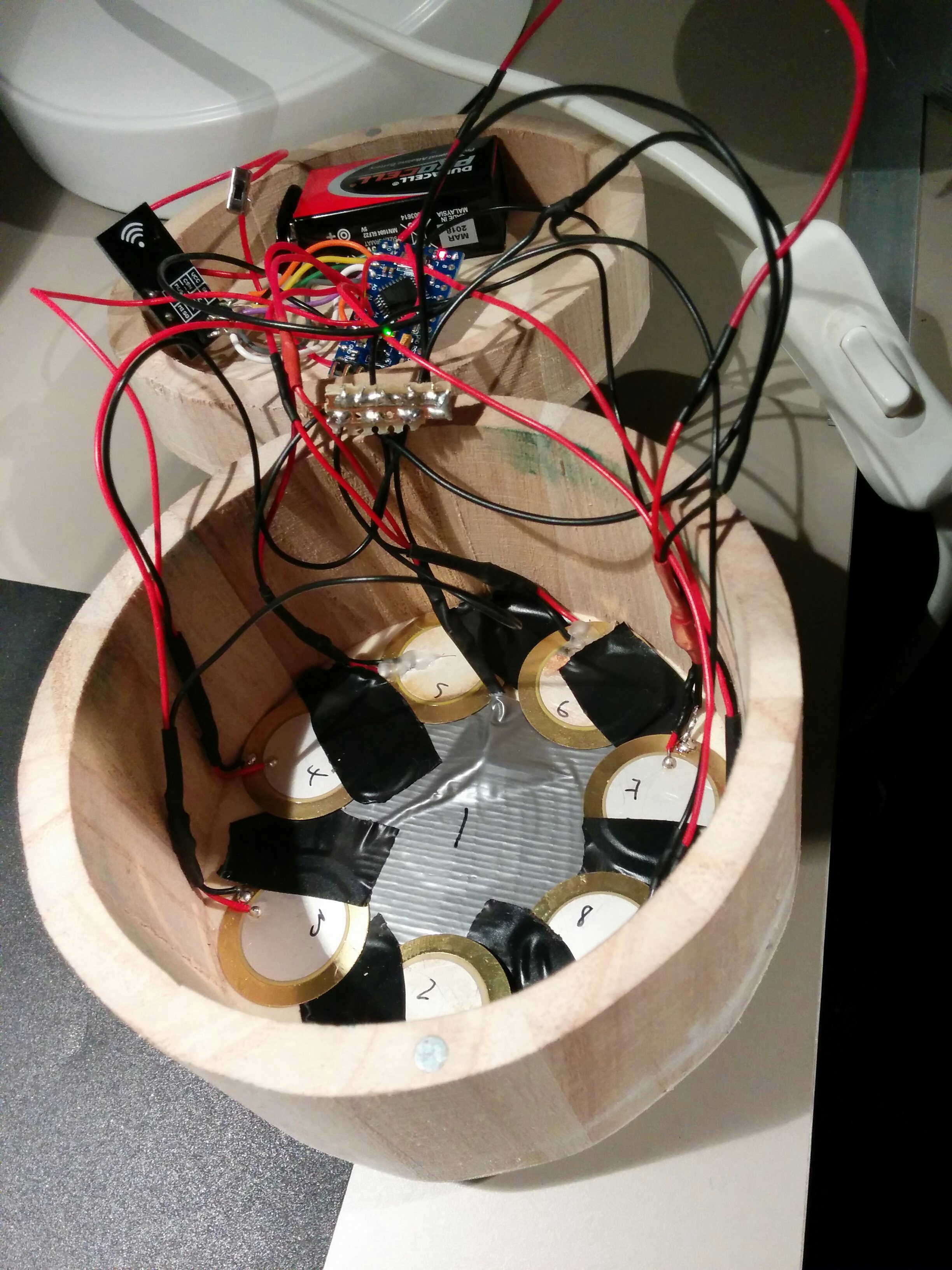

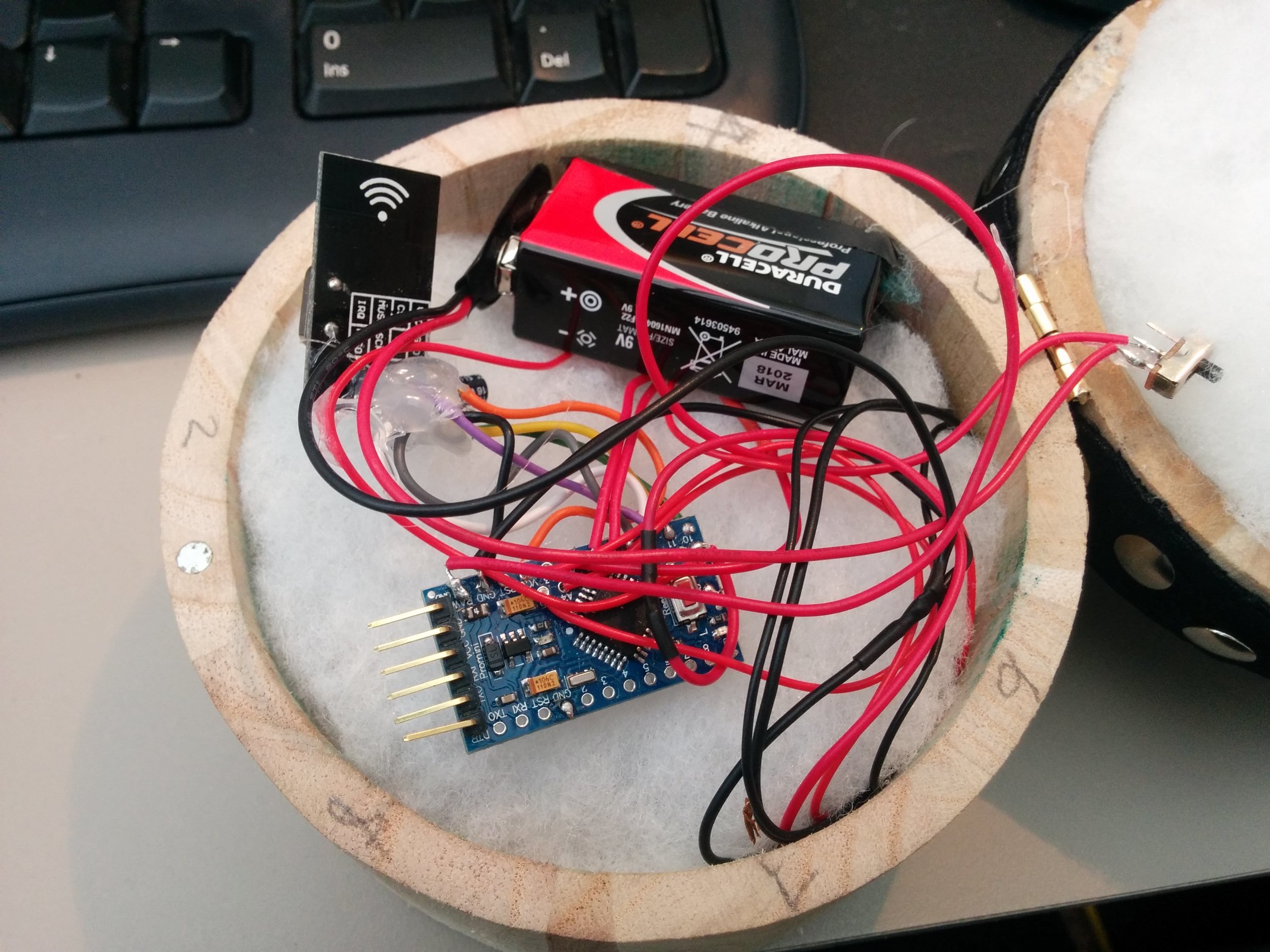

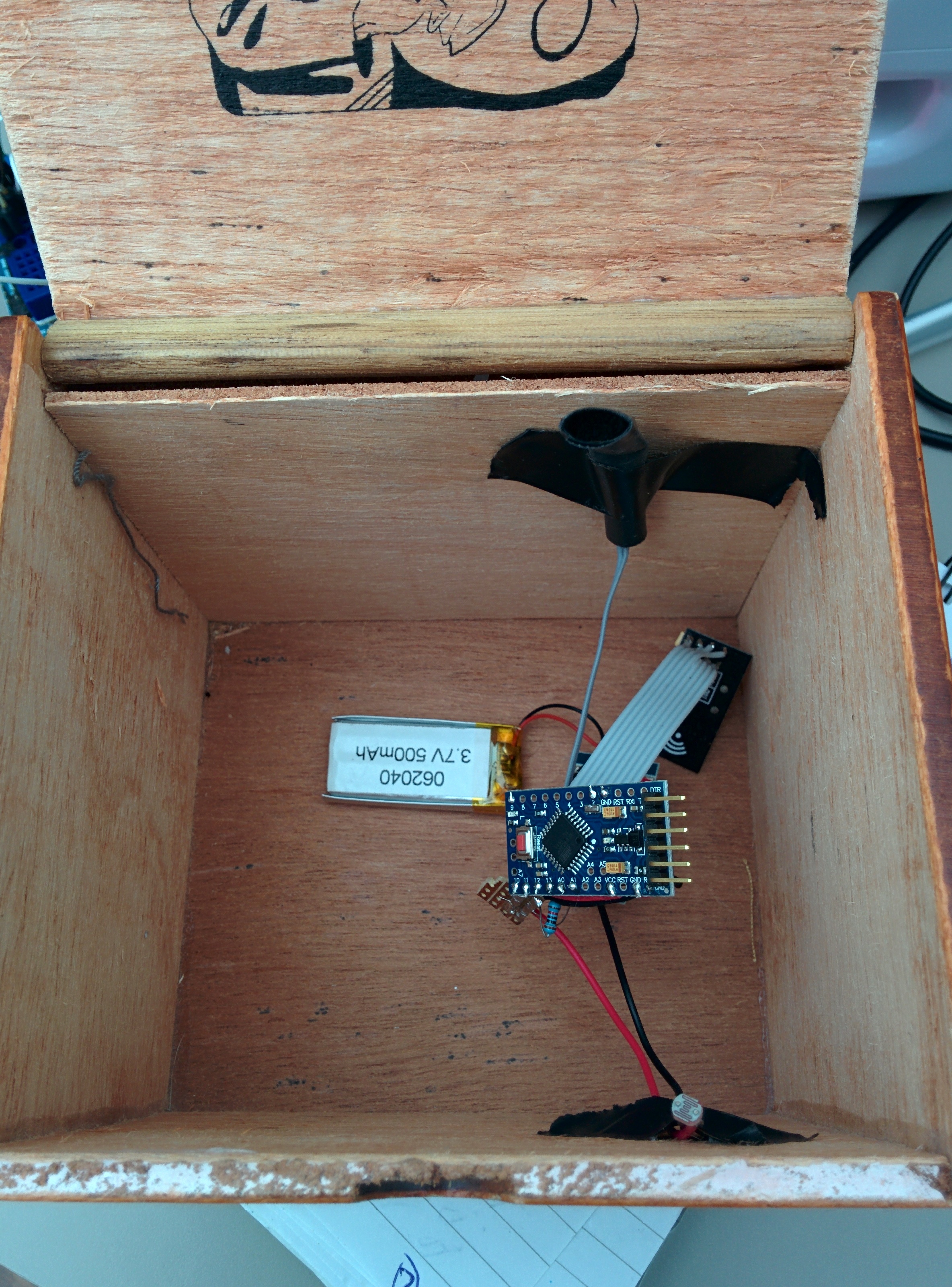

So, with all this in mind, we have started developing MAMI, the Modular Accessible Musical Instrument. Catering for any kind of input and a variety of outputs, while keeping everything extremely flexible and routable, is a mammoth undertaking, so we will focus first on our own immediate requirements, with a view to building something that can be extended over the long term. At the time of writing I have built a system in which you can dynamically create devices and assign input receivers within those devices. So far I have concentrated on Human Interface Devices (joysticks, keyboards, games controllers etc.) and am now moving on to serial input. I treat every controller as a combination of analogue controls (sliders, knobs, joysticks, pressure sensors etc.) and digital controls (buttons, keys etc.).
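MAMI itself is being built in Max, so the following is only an illustrative sketch of the device model described above, written in Python for readability. It assumes a device is a named collection of analogue and digital controls, that each control normalises its raw value to the range 0.0 to 1.0, and that each control can be routed to any number of targets (stand-ins for sound generators or effect parameters). All class and method names here (`Device`, `AnalogueInput`, `DigitalInput`, `map`, `receive`) are hypothetical, and the signed 16-bit joystick range is just an example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Union

# Hypothetical sketch of the analogue/digital controller model; not MAMI's actual code.

@dataclass
class AnalogueInput:
    """A continuous control: slider, knob, joystick axis, pressure sensor."""
    name: str
    minimum: float
    maximum: float

    def normalise(self, raw: float) -> float:
        # Clamp the raw reading to the control's range, then scale to 0.0..1.0
        raw = max(self.minimum, min(self.maximum, raw))
        return (raw - self.minimum) / (self.maximum - self.minimum)


@dataclass
class DigitalInput:
    """An on/off control: button, key."""
    name: str

    def normalise(self, raw: float) -> float:
        # Any non-zero reading counts as "pressed"
        return 1.0 if raw else 0.0


Control = Union[AnalogueInput, DigitalInput]


@dataclass
class Device:
    """A dynamically created device holding input receivers and their routings."""
    name: str
    inputs: Dict[str, Control] = field(default_factory=dict)
    routes: Dict[str, List[Callable[[float], None]]] = field(default_factory=dict)

    def add_input(self, control: Control) -> None:
        self.inputs[control.name] = control
        self.routes.setdefault(control.name, [])

    def map(self, control_name: str, target: Callable[[float], None]) -> None:
        # Route one control to a target; a control may feed several targets
        self.routes[control_name].append(target)

    def receive(self, control_name: str, raw: float) -> None:
        # Normalise the incoming value and forward it to every mapped target
        value = self.inputs[control_name].normalise(raw)
        for target in self.routes[control_name]:
            target(value)


# Usage: a games controller with one joystick axis and one button
params: Dict[str, float] = {}
pad = Device("gamepad")
pad.add_input(AnalogueInput("left_stick_x", -32768, 32767))  # assumed 16-bit axis
pad.add_input(DigitalInput("button_a"))
pad.map("left_stick_x", lambda v: params.__setitem__("filter_cutoff", v))
pad.map("button_a", lambda v: params.__setitem__("note_on", v))
pad.receive("left_stick_x", 32767)
pad.receive("button_a", 1)
print(params)  # {'filter_cutoff': 1.0, 'note_on': 1.0}
```

Keeping the analogue/digital distinction inside each control means a routing target never needs to know what kind of hardware produced the value, which is the property a plug-anything system depends on.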

Needless to say, it will be a while before a first release!